Project 6 – Asynchronous Calls in the BP

Our next integration uses the SOAP Inbound Adapter to call a BP that orchestrates calls to two BOs in asynchronous mode.

Let's start by creating the Request and Response messages for our service:

Class ws.credito.msg.Request Extends Ens.Request
{

Property cpf As %String(PATTERN = "1N.N");

}

Class ws.credito.msg.Response Extends Ens.Response
{

Property nome As %String;

Property cpf As %String(PATTERN = "1N.N");

Property autorizado As %Boolean;

Property servico As %String;

Property status As %Boolean;

Property mensagem As %String;

Property sessionId As %Integer;

}
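The PATTERN = "1N.N" constraint on cpf means one numeric character followed by any number of further numeric characters, that is, a non-empty string of digits. As a rough illustration only, the same rule can be sketched in Python. The names CreditRequest and cpf_is_valid are hypothetical and exist only in this sketch, not in IRIS:

```python
import re
from dataclasses import dataclass

# Approximate regex equivalent of the ObjectScript pattern "1N.N":
# exactly one digit, then zero or more digits.
CPF_PATTERN = re.compile(r"[0-9][0-9]*")


def cpf_is_valid(cpf: str) -> bool:
    """Return True when cpf is a non-empty string made only of digits."""
    return CPF_PATTERN.fullmatch(cpf) is not None


@dataclass
class CreditRequest:
    """Hypothetical Python mirror of ws.credito.msg.Request."""
    cpf: str

    def __post_init__(self) -> None:
        # Reject values the PATTERN constraint would also reject.
        if not cpf_is_valid(self.cpf):
            raise ValueError(f"cpf must contain only digits: {self.cpf!r}")
```

Note that the pattern checks only the character class, so a formatted CPF such as 111.111.111-11 is rejected, and no check-digit validation takes place.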

Now let's look at our BS which, as mentioned above, uses the SOAP Inbound Adapter:

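Class ws.credito.bs.Service Extends EnsLib.SOAP.Service
{

Parameter ADAPTER = "EnsLib.SOAP.InboundAdapter";

Parameter SERVICENAME = "credito";

Method consulta(pInput As ws.credito.msg.Request) As ws.credito.msg.Response [ WebMethod ]
{
    // Forward the request to the BP and wait for its response
    Set tSC=..SendRequestSync("bpCredito",pInput,.tResponse)
    // If the call failed, return a SOAP fault instead of an undefined response
    If $$$ISERR(tSC) Do ..ReturnMethodStatusFault(tSC)
    Quit tResponse
}

}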

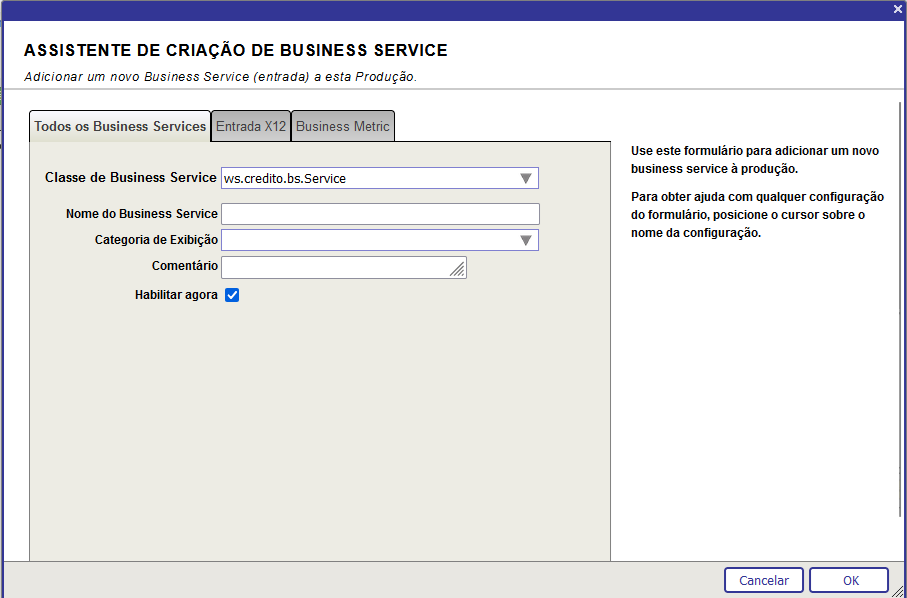

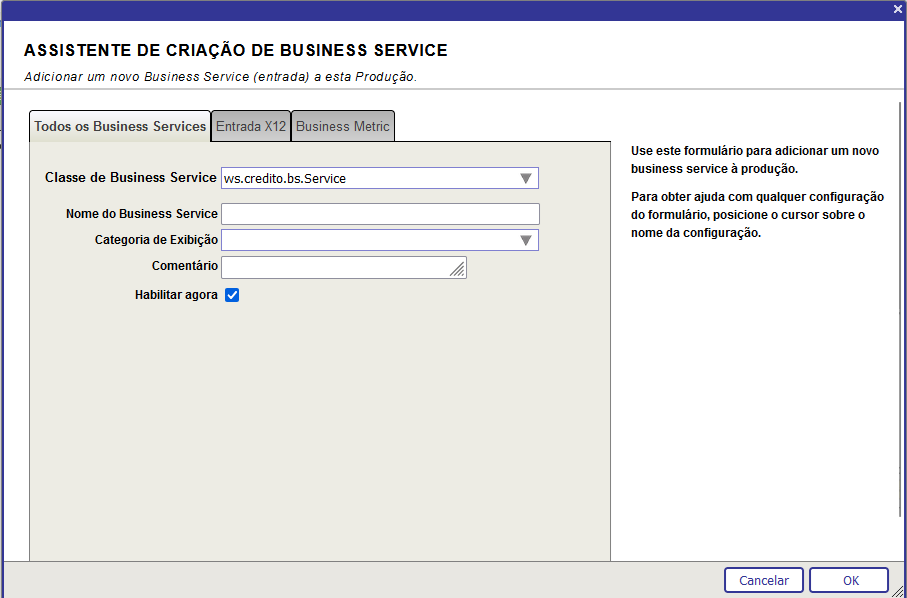

Now let's add our BS to the production. Open the Management Portal and go to our test production. Click the (+) button next to Services and fill in the dialog with the data shown:

Now return to the production, click the name of our BS (ws.credito.bs.Service), and look at its configuration. Expand the Connection Settings area and check the Enable Standard Requests box, as shown in the screen below:

Then click Atualizar (the button that applies the changes) and our test BS will be ready.

Now let's look at our BO. This time we will add the BO to the production twice, under different names. Each BO simulates a call to a credit validation service, with a different response time for each request. The code of our BO is below:

Class ws.credito.bo.Operation Extends Ens.BusinessOperation [ ProcedureBlock ]
{

Method consulta(pRequest As ws.credito.msg.Request, Output pResponse As ws.credito.msg.Response) As %Library.Status
{
    // Simulate a service response time of 1 to 10 seconds
    Hang $R(10)+1
    Set pResponse=##Class(ws.credito.msg.Response).%New()
    // Default answer: unknown CPF, credit not authorized
    Set pResponse.nome="NOME DA PESSOA "_pRequest.cpf
    Set pResponse.cpf=pRequest.cpf
    Set pResponse.autorizado=0
    // The four CPFs below are the ones that get authorized
    If pRequest.cpf="11111111111" {
        Set pResponse.nome="PESSOA NUMERO 1"
        Set pResponse.autorizado=1
    }
    If pRequest.cpf="22222222222" {
        Set pResponse.nome="PESSOA NUMERO 2"
        Set pResponse.autorizado=1
    }
    If pRequest.cpf="33333333333" {
        Set pResponse.nome="PESSOA NUMERO 3"
        Set pResponse.autorizado=1
        Set pResponse.status=1
    }
    If pRequest.cpf="44444444444" {
        Set pResponse.nome="PESSOA NUMERO 4"
        Set pResponse.autorizado=1
        Set pResponse.status=1
    }
    // Every answered request is reported as successful
    Set pResponse.status=1
    Set pResponse.mensagem="OK"
    Set pResponse.sessionId=..%SessionId
    Quit $$$OK
}

XData MessageMap
{
<MapItems>
    <MapItem MessageType="ws.credito.msg.Request">
        <Method>consulta</Method>
    </MapItem>
</MapItems>
}

}

The line Hang $R(10)+1 sets a wait time between 1 and 10 seconds for each request the BO handles: $R(10) returns a random integer from 0 to 9, and adding 1 shifts the range to 1 through 10. This simulates the response time of the service.
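To make the range of the simulated delay concrete, here is a small Python sketch of the same arithmetic. It is only an analogy: random.Random.randrange(10) plays the role of $R(10), and the function name simulated_delay_seconds is hypothetical.

```python
import random


def simulated_delay_seconds(rng: random.Random) -> int:
    """Mimic Hang $R(10)+1: a whole number of seconds from 1 to 10."""
    # randrange(10) yields 0..9, like $R(10); adding 1 shifts it to 1..10.
    return rng.randrange(10) + 1


# A fixed seed is used only so that the sketch is repeatable.
rng = random.Random(42)
delays = [simulated_delay_seconds(rng) for _ in range(1000)]
print(min(delays), max(delays))
```

Because the delay can exceed the 5-second wait used later in the BP, some requests are expected to miss the wait window, which is exactly the situation this project sets out to demonstrate.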

With the BO code done, let's add it to the production. First we add the BO under the name boConsultaCreditoABC. To do so, click the (+) button next to Operations in our production and fill in the dialog as shown below:

Then repeat the operation, this time adding the BO boConsultaCreditoXYZ:

Done. We have our two BOs in the production. Now let's look at our BP:

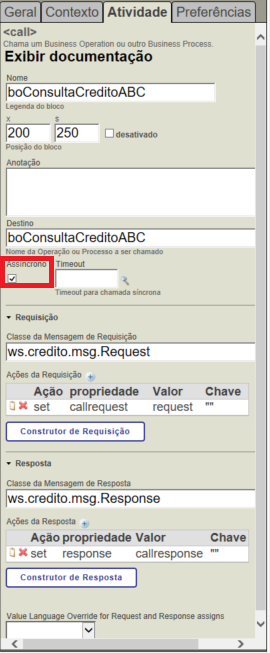

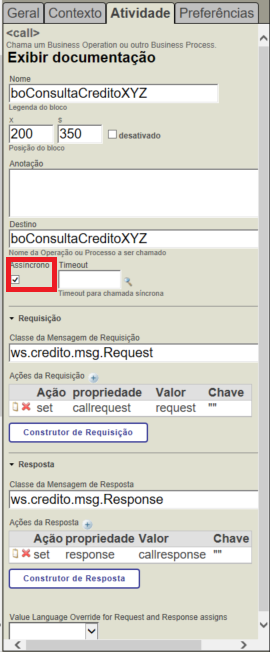

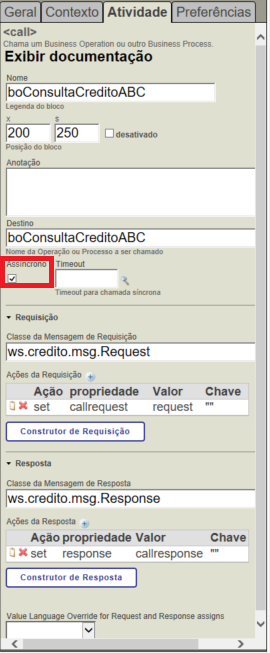

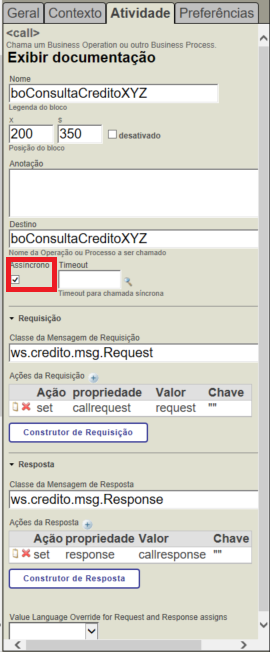

Our BP now calls two BOs without waiting for them, and then uses a SYNC component that waits for the responses to those calls for the configured time. Note that in each BO call (CALL component) we must check the box indicating that the call is ASYNCHRONOUS:

Do the same for boConsultaCreditoXYZ:

Now, in our SYNC component, we specify which BO responses to wait for and how long to wait:

In the Calls box we enter the names of the calls to wait for (boConsultaCreditoABC and boConsultaCreditoXYZ), and in the Timeout box the wait time (5 seconds). Remember: a BP can call other BPs or other BOs.
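The "start both calls, then wait for all of them up to a timeout" behaviour can be sketched outside IRIS with Python's standard concurrent.futures module. This is only an analogy for the CALL and SYNC components, not how IRIS implements them. The functions call_operation and call_all_and_sync are hypothetical, and the delays are scaled down so the sketch finishes quickly:

```python
import time
from concurrent.futures import ThreadPoolExecutor, wait


def call_operation(name: str, delay: float) -> dict:
    """Hypothetical stand-in for a BO: sleeps, then returns a response."""
    time.sleep(delay)
    return {"answered_by": name, "status": True}


def call_all_and_sync(delays: dict, timeout: float) -> dict:
    """Start every call without waiting, then wait up to `timeout` seconds.

    Returns only the responses that arrived in time, keyed by call name.
    """
    pool = ThreadPoolExecutor(max_workers=len(delays))
    # Asynchronous calls: submit() returns immediately for each target.
    futures = {pool.submit(call_operation, name, d): name
               for name, d in delays.items()}
    # Like a sync of type "all": return when every call is done or on timeout.
    done, _late = wait(futures, timeout=timeout)
    pool.shutdown(wait=False)  # do not block on responses that arrive late
    return {futures[f]: f.result() for f in done}


responses = call_all_and_sync(
    {"boConsultaCreditoABC": 1.0, "boConsultaCreditoXYZ": 0.01},
    timeout=0.3,
)
print(sorted(responses))
```

With these illustrative delays, only the XYZ call is expected to finish inside the wait window. The ABC response still arrives later but is no longer part of the collected result.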

The complete code of our BP is below:

///
Class ws.credito.bp.Process Extends Ens.BusinessProcessBPL [ ClassType = persistent, ProcedureBlock ]
{

/// BPL Definition
XData BPL [ XMLNamespace = "http://www.intersystems.com/bpl" ]
{
<process language='objectscript' request='ws.credito.msg.Request' response='ws.credito.msg.Response' height='2000' width='2000' >
<sequence xend='200' yend='1450' >
    <call name='boConsultaCreditoABC' target='boConsultaCreditoABC' async='1' xpos='200' ypos='250' >
        <request type='ws.credito.msg.Request' >
            <assign property="callrequest" value="request" action="set" languageOverride="" />
        </request>
        <response type='ws.credito.msg.Response' >
            <assign property="response" value="callresponse" action="set" languageOverride="" />
        </response>
    </call>
    <call name='boConsultaCreditoXYZ' target='boConsultaCreditoXYZ' async='1' xpos='200' ypos='350' >
        <request type='ws.credito.msg.Request' >
            <assign property="callrequest" value="request" action="set" languageOverride="" />
        </request>
        <response type='ws.credito.msg.Response' >
            <assign property="response" value="callresponse" action="set" languageOverride="" />
        </response>
    </call>
    <sync name='Aguarda Operations' calls='boConsultaCreditoABC,boConsultaCreditoXYZ' timeout='5' type='all' xpos='200' ypos='450' />
    <if name='Recebeu ABC' condition='$IsObject(syncresponses.GetAt("boConsultaCreditoABC"))' xpos='200' ypos='550' xend='200' yend='1350' >
        <true>
            <assign name="Atribui response ABC" property="response" value="syncresponses.GetAt(&quot;boConsultaCreditoABC&quot;)" action="set" languageOverride="" xpos='200' ypos='700' />
            <assign name="Atribui servico" property="response.servico" value="&quot;ABC&quot;" action="set" languageOverride="" xpos='200' ypos='800' />
        </true>
        <false>
            <if name='Recebeu XYZ' condition='$IsObject(syncresponses.GetAt("boConsultaCreditoXYZ"))' xpos='470' ypos='700' xend='470' yend='1250' >
                <true>
                    <assign name="Atribui response XYZ" property="response" value="syncresponses.GetAt(&quot;boConsultaCreditoXYZ&quot;)" action="set" languageOverride="" xpos='470' ypos='850' />
                    <assign name="Atribui servico" property="response.servico" value="&quot;XYZ&quot;" action="set" languageOverride="" xpos='470' ypos='950' />
                </true>
                <false>
                    <assign name="Atribui CPF" property="response.cpf" value="request.cpf" action="set" languageOverride="" xpos='740' ypos='850' />
                    <assign name="Atribui status Erro" property="response.status" value="0" action="set" languageOverride="" xpos='740' ypos='950' />
                    <assign name="Atribui msg Erro" property="response.mensagem" value="&quot;Sem resposta do serviço&quot;" action="set" languageOverride="" xpos='740' ypos='1050' />
                    <assign name="Atribui SessionID" property="response.sessionId" value="..%Process.%SessionId" action="set" languageOverride="" xpos='740' ypos='1150' />
                </false>
            </if>
        </false>
    </if>
</sequence>
</process>
}

Storage Default
{
<Type>%Storage.Persistent</Type>
}

}

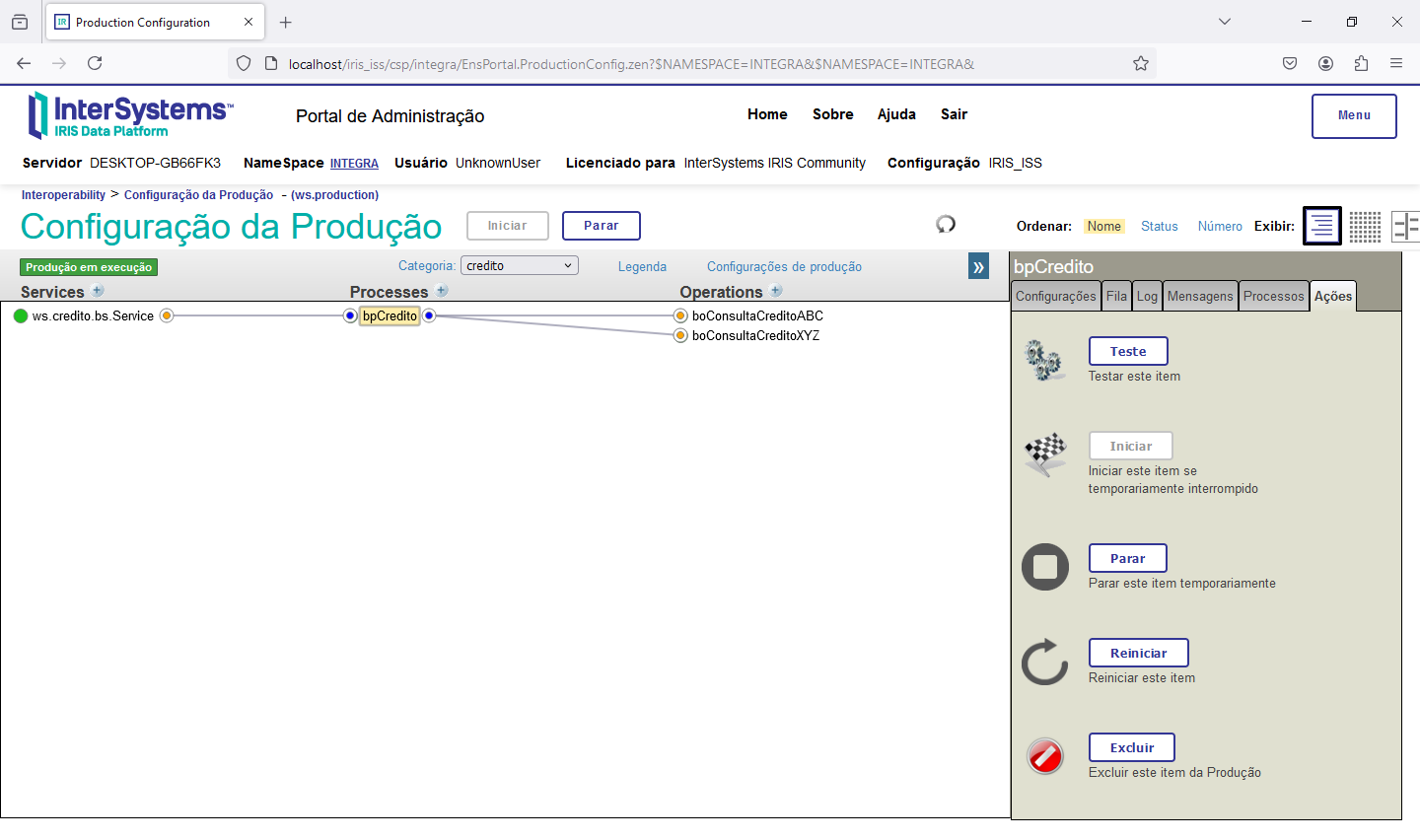

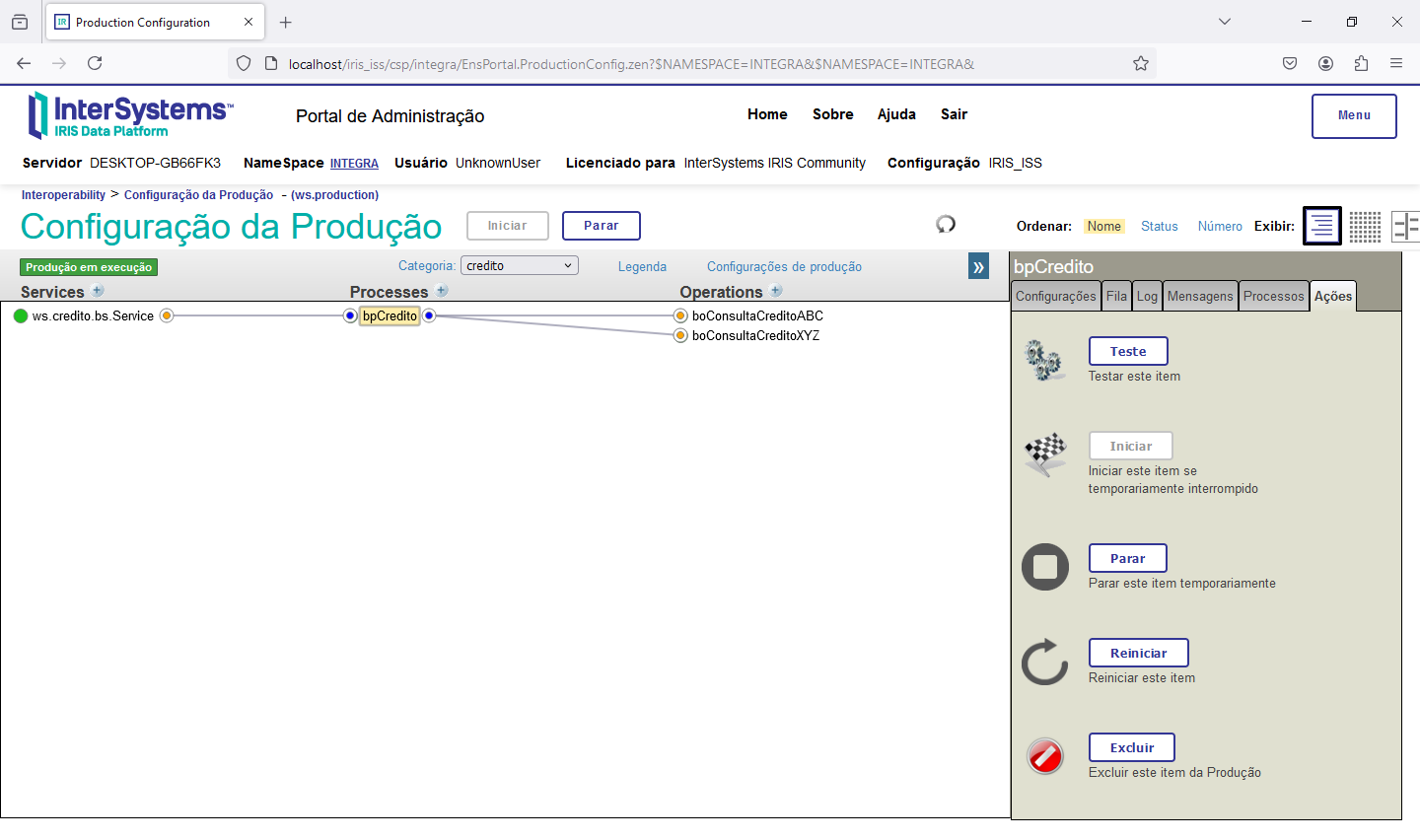

With our components in the production, click the green circle next to bpCredito to see the connections between the components:

Let's view our service's WSDL. Open a browser and go to http://localhost/iris_iss/csp/integra/ws.credito.bs.Service.cls?WSDL=1 (replace iris_iss with the name of your IRIS configuration and integra with the namespace where you are placing your code). You will see a screen like this:

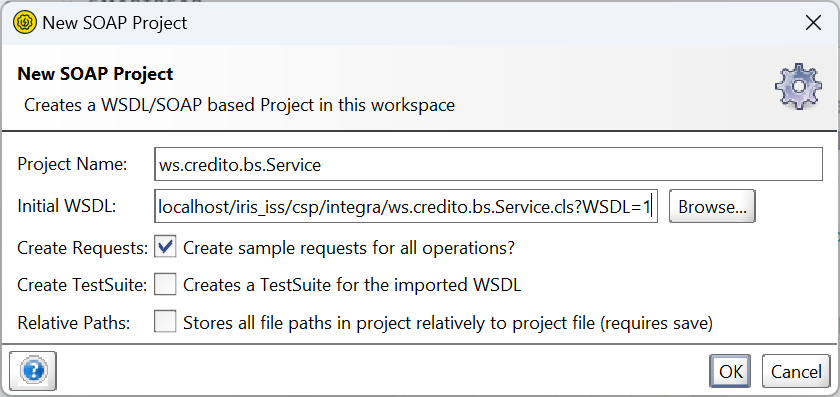

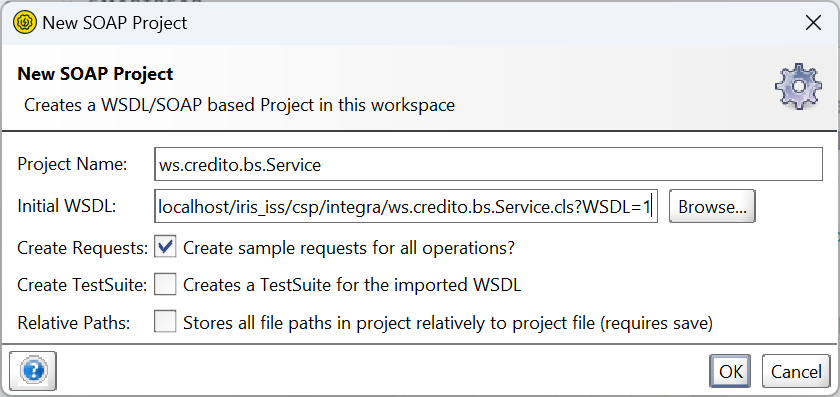
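Since the WSDL address changes with the IRIS configuration name and the namespace, it can help to see which parts of the URL vary. The small Python helper below is a hypothetical convenience, not part of IRIS. It simply rebuilds the address used in this article from its parts:

```python
def wsdl_url(instance: str, namespace: str, service_class: str,
             host: str = "localhost") -> str:
    """Build the WSDL address following the URL layout used in this article."""
    return f"http://{host}/{instance}/csp/{namespace}/{service_class}.cls?WSDL=1"


# Values taken from the article; replace them with your own configuration.
print(wsdl_url("iris_iss", "integra", "ws.credito.bs.Service"))
# prints http://localhost/iris_iss/csp/integra/ws.credito.bs.Service.cls?WSDL=1
```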

Next, open SoapUI and import the WSDL. Go to File->New SOAP Project and, in the window that appears, enter the WSDL address in the Initial WSDL box and click OK:

Project Name is filled in automatically. After you click OK, the test interface for the service becomes available:

Replace the ? in the XML with the CPF you want to look up. See the BO code for the values that produce an authorized response. For example, 11111111111:
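For readers who want to see roughly what the request looks like outside SoapUI, the Python sketch below builds a SOAP envelope with the standard library. The element layout (a consulta element containing pInput and cpf, all in the http://tempuri.org namespace) is an assumption derived from the method signature and from the namespace seen in the service response. The authoritative names are the ones in the WSDL and in the request that SoapUI generates.

```python
import xml.etree.ElementTree as ET

SOAP_ENV = "http://schemas.xmlsoap.org/soap/envelope/"
SERVICE_NS = "http://tempuri.org"  # namespace seen in the service response


def build_consulta_envelope(cpf: str) -> bytes:
    """Build a request envelope for the consulta method (assumed layout)."""
    envelope = ET.Element(f"{{{SOAP_ENV}}}Envelope")
    body = ET.SubElement(envelope, f"{{{SOAP_ENV}}}Body")
    # Assumption: the wrapper element is named after the web method and the
    # argument element after the parameter name (pInput).
    consulta = ET.SubElement(body, f"{{{SERVICE_NS}}}consulta")
    p_input = ET.SubElement(consulta, f"{{{SERVICE_NS}}}pInput")
    ET.SubElement(p_input, f"{{{SERVICE_NS}}}cpf").text = cpf
    return ET.tostring(envelope, encoding="utf-8", xml_declaration=True)


print(build_consulta_envelope("11111111111").decode("utf-8"))
```

The sketch only builds the XML. Sending it would also require the service endpoint URL and the SOAPAction header, both of which should be read from the WSDL.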

Note that we receive an XML document with the service response, indicating which BO handled our call:

<?xml version="1.0" encoding="UTF-8" ?>

<SOAP-ENV:Envelope xmlns:SOAP-ENV='http://schemas.xmlsoap.org/soap/envelope/' xmlns:xsi='http://www.w3.org/2001/XMLSchema-instance' xmlns:s='http://www.w3.org/2001/XMLSchema'>

<SOAP-ENV:Body><consultaResponse xmlns="http://tempuri.org"><consultaResult><nome>PESSOA NUMERO 1</nome><cpf>11111111111</cpf><autorizado>true</autorizado><servico>XYZ</servico><status>true</status><mensagem>OK</mensagem><sessionId>2266</sessionId></consultaResult></consultaResponse></SOAP-ENV:Body>

</SOAP-ENV:Envelope>
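If the response needs to be checked by a script rather than by eye, the fields can be pulled out with Python's standard XML parser. The sketch below parses the exact response shown above. The function name parse_consulta_response is hypothetical.

```python
import xml.etree.ElementTree as ET

NS = {
    "soap": "http://schemas.xmlsoap.org/soap/envelope/",
    "svc": "http://tempuri.org",
}

# The response shown above, as received from the service.
SAMPLE = b"""<?xml version="1.0" encoding="UTF-8" ?>
<SOAP-ENV:Envelope xmlns:SOAP-ENV='http://schemas.xmlsoap.org/soap/envelope/' xmlns:xsi='http://www.w3.org/2001/XMLSchema-instance' xmlns:s='http://www.w3.org/2001/XMLSchema'>
<SOAP-ENV:Body><consultaResponse xmlns="http://tempuri.org"><consultaResult><nome>PESSOA NUMERO 1</nome><cpf>11111111111</cpf><autorizado>true</autorizado><servico>XYZ</servico><status>true</status><mensagem>OK</mensagem><sessionId>2266</sessionId></consultaResult></consultaResponse></SOAP-ENV:Body>
</SOAP-ENV:Envelope>"""


def parse_consulta_response(xml_bytes: bytes) -> dict:
    """Return the fields of consultaResult as a dict of strings."""
    root = ET.fromstring(xml_bytes)
    result = root.find("soap:Body/svc:consultaResponse/svc:consultaResult", NS)
    if result is None:
        raise ValueError("consultaResult element not found")
    # Strip the namespace from each child tag: "{ns}nome" -> "nome".
    return {child.tag.split("}", 1)[-1]: child.text for child in result}


fields = parse_consulta_response(SAMPLE)
print(fields["servico"], fields["sessionId"])  # prints XYZ 2266
```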

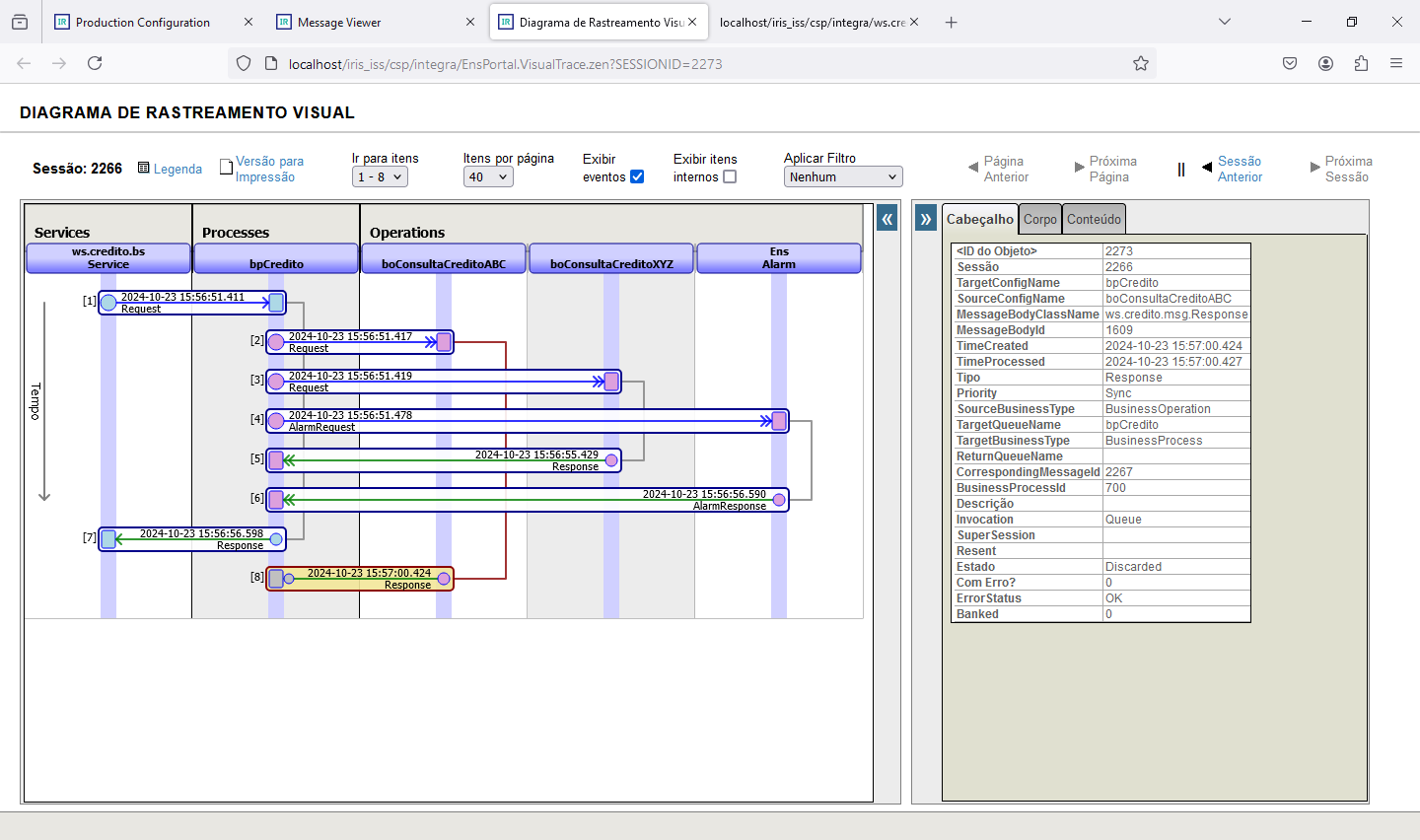

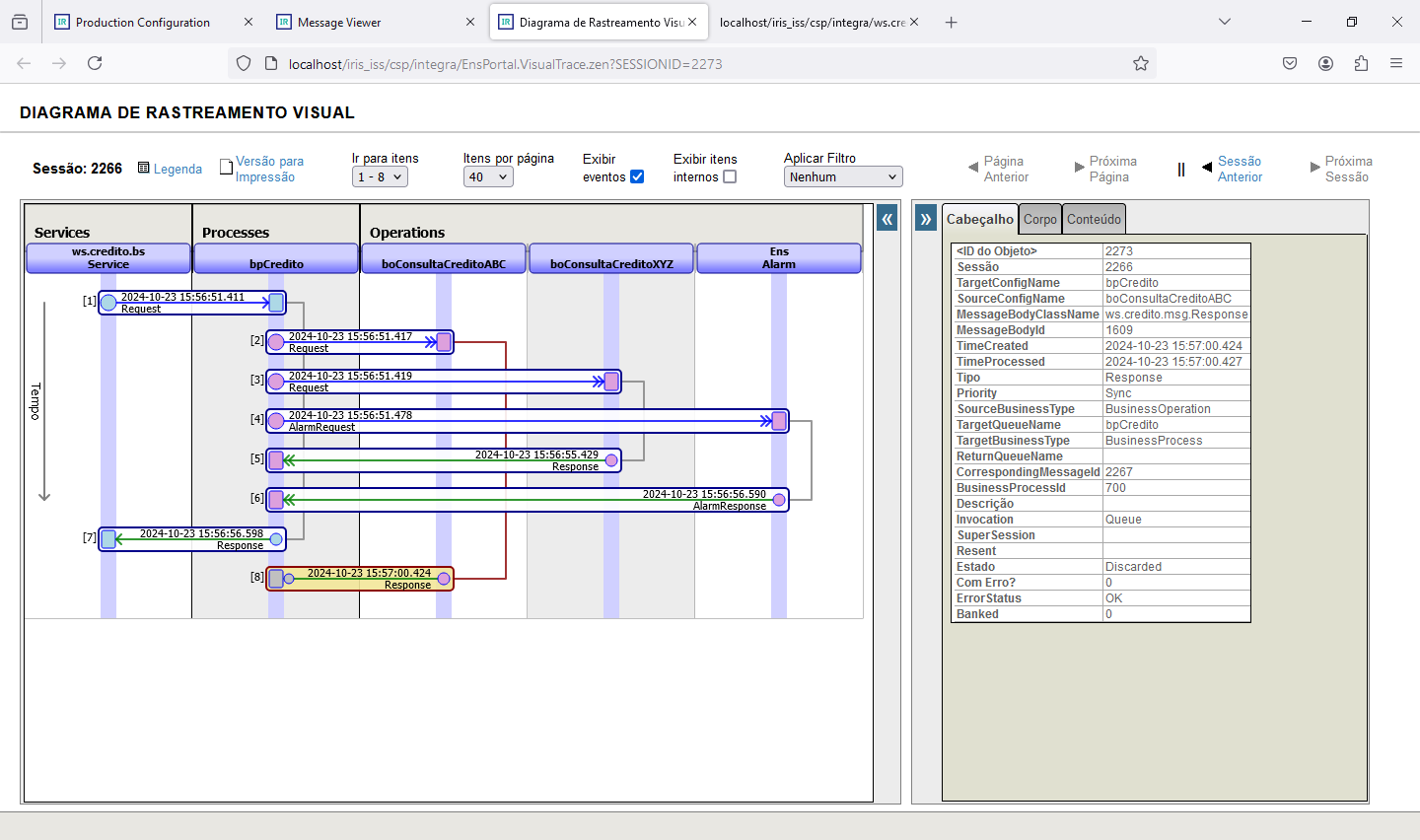

Checking the trace of the integration with SessionID 2266 shows what happened:

From the trace we can see that the Response from boConsultaCreditoXYZ came back quickly, while the Response from boConsultaCreditoABC took longer and arrived only after the SYNC component's wait time had elapsed. In other words, the SYNC component waited up to 5 seconds for the BOs to return. Once the wait ends, we check which BOs responded and handle the result as desired. See the logic in the IFs of our BP: the ABC response is used if it arrived, otherwise the XYZ response, and if neither arrived an error response is built.
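The decision made by the nested IFs can be restated as a short Python function. This is only a sketch of the branching. The dictionary sync_responses stands in for the BPL syncresponses collection, and the function name choose_response is hypothetical.

```python
def choose_response(sync_responses: dict, cpf: str, session_id: int) -> dict:
    """Pick the response the way the BP's IFs do: ABC first, then XYZ."""
    for call_name, servico in (("boConsultaCreditoABC", "ABC"),
                               ("boConsultaCreditoXYZ", "XYZ")):
        answer = sync_responses.get(call_name)
        if answer is not None:
            # Use the BO's response and record which service produced it.
            return {**answer, "servico": servico}
    # Neither BO answered within the wait time: build the error response.
    return {
        "cpf": cpf,
        "status": False,
        "mensagem": "Sem resposta do serviço",
        "sessionId": session_id,
    }


# Scenario from the trace: only XYZ answered in time.
only_xyz = {"boConsultaCreditoXYZ": {"nome": "PESSOA NUMERO 1", "status": True}}
print(choose_response(only_xyz, "11111111111", 2266)["servico"])  # prints XYZ
```

One consequence of this ordering is that ABC always wins when both BOs answer in time, regardless of which one answered first.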

Remember that we can use TCPTRACE to inspect the traffic between SoapUI and our BS, which is especially useful when testing.

That concludes this integration. Our test used IRIS 2024.1, whose Community edition is available for download on the internet.
