Hi Community!

Here's a new challenge for you: the third InterSystems technical article writing contest in Spanish is here!

🏆 3rd Technical Article Contest in Spanish 🏆

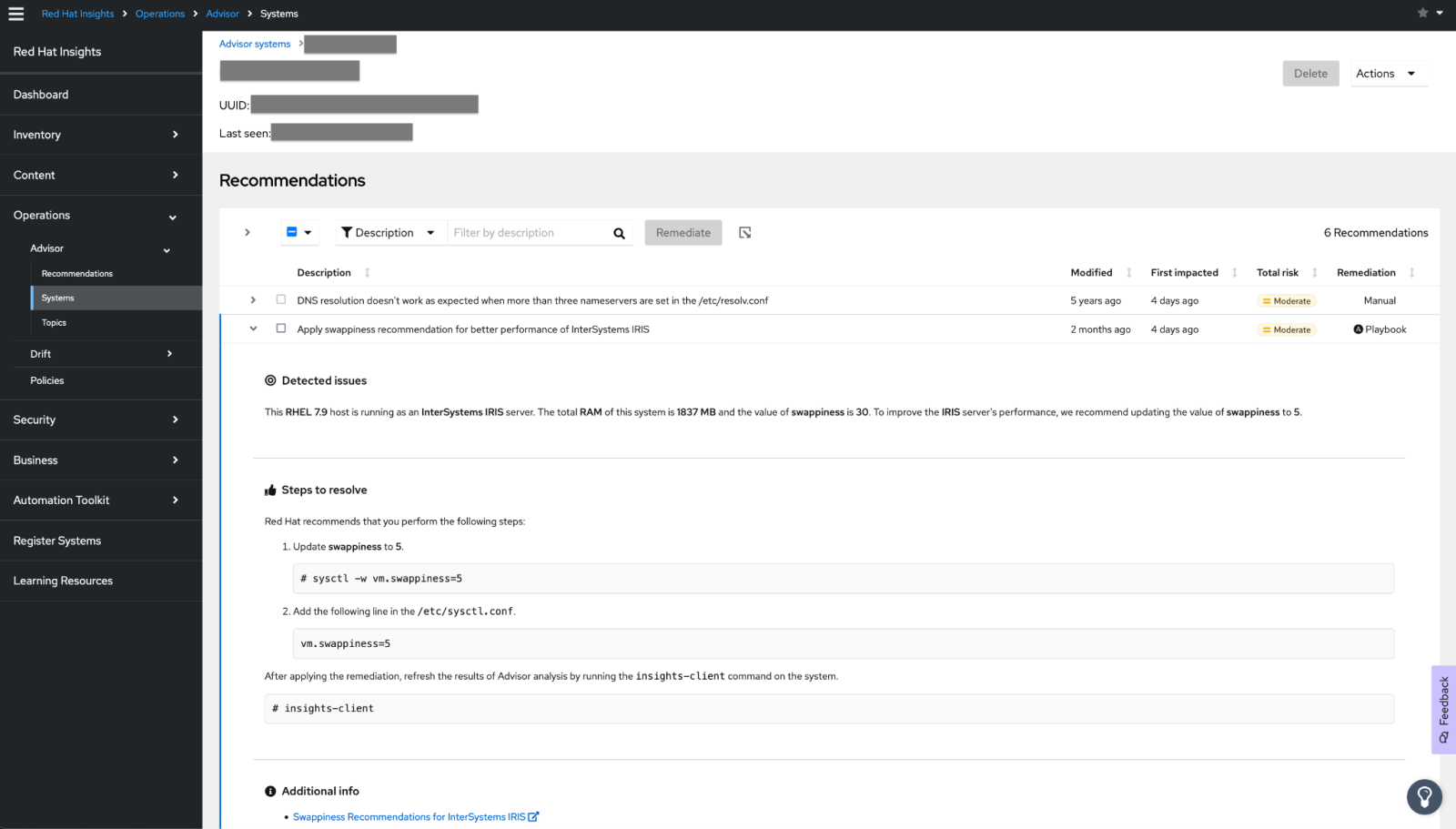

Contest description: write an article in Spanish on the Developer Community about any topic related to InterSystems technology.

Duration: May 6 to June 2, 2024.

Prizes for all participants: everyone who publishes an article in Spanish during the contest will receive a prize.

Main prize: LEGO Ferrari Daytona SP3 or Gringotts™ Wizarding Bank - Collectors' Edition.

Join the contest and reach hundreds of users! It's one of the best opportunities to publish those tips you've discovered.

Prizes

1. Everyone wins in the InterSystems technical article contest: any Community member who takes part will receive a small gift from InterSystems for participating.

2. Expert Awards: the winning articles in this category will be chosen by InterSystems experts, and each winner can choose one of the following:

🥇 1st place: LEGO Ferrari Daytona SP3 or Gringotts™ Wizarding Bank - Collectors' Edition.

🥈 2nd place: LEGO NASA Artemis Space Launch System or Patagonia Nano Puff® Hoody men's jacket.

🥉 3rd place: JBL Flip 6 speaker, Amazon Kindle 8G Paperwhite (11th generation) or Samsonite SPECTROLITE 3.0 15.6" backpack.

Alternatively, any winner may choose a prize from a lower tier than their own.

Note: prizes are subject to change if availability in the winner's country does not allow delivery.

3. Developer Community Award: the article with the most "Likes". The winner can choose one of these prizes:

🎁 JBL Flip 6 speaker, Amazon Kindle 8G Paperwhite (11th generation) or Samsonite SPECTROLITE 3.0 15.6" backpack.

Note: each author can win only one prize per category (in total, an author can win two prizes: one in the Expert category and one in the Community category).

Who can participate?

Anyone registered in the Developer Community, except InterSystems employees. Sign up for the Community if you don't have an account yet.

Contest timeline

📝 May 6 to June 2: article publication.

📝 June 3 to June 9: voting phase.

Publish your article(s) during the publication period. Developer Community members can vote for the articles they like by clicking "Like" below each article.

Tip: the sooner you publish your article(s), the more time you will have to collect votes from the Experts and the Community.

🎉 June 10: winners announced.

Requirements

❗️ Any article written during the contest period that meets the following requirements will automatically enter the competition ❗️:

- The article must be directly or indirectly related to InterSystems technology (features of InterSystems products, as well as complementary tools, architectural solutions, development best practices, etc.).

- The article must be written in Spanish.

- The article must be entirely new (it may be a continuation of a previously published article).

- The article cannot be a copy or translation of another article published in the Spanish Developer Community or in any other Community.

- Article length: more than 1,000 characters (links do not count toward the character total).

- Participation: individual (a participant may publish several articles).

What can you write about?

You can choose any technical topic directly or indirectly related to InterSystems technology.

🎯 BONUS:

The Experts award 3 votes to the article they consider the best, 2 votes to their second choice and 1 vote to their third choice. In addition, articles can earn extra points through the following bonuses:

Note: the judges' decision is final.

1. New author bonus: if this is your first time taking part in the Spanish Technical Article Contest, your article will receive 1 extra vote from the Experts.

2. Topic bonus: if your article covers one of the following topics, it will receive 2 extra points.


3. Video bonus: if the article is accompanied by an explanatory video, the author will receive 4 points.

4. Tutorial bonus: you will receive 3 points if the article is written as a tutorial, with step-by-step instructions that a developer can follow to complete one or more specific tasks.

So... let's go!

We can't wait to read your articles!

Community! May the Force be with you! ✨🤝